The Army Futures Command explores manned-unmanned drone teaming / U.S. Army

Hyperwar: How Militarized AI is Transforming the Decision-Making Loop

This week U.N. Secretary-General António Guterres convened fresh talks in Geneva to discuss the evolving relationship of humans and machines in contemporary warfare. The proposed changes to the Convention on Certain Conventional Weapons underline increasing scrutiny of AI-enabled warfare and autonomous weapons systems in the wake of the first suspected supervised autonomous drone strike in Libya earlier this year, when a “fire, forget and find” loitering munition drone was used in combat.

As AI and other technologies catalyze shifts in 21st-century warfare, humans will take a back seat as primary decision-makers in future conflicts. So what will this new AI-powered warfare look like, and what challenges must the U.S. meet to adapt to future engagements and mitigate catastrophic fallout?

Where no machine has gone before

With the advent of big data and deep learning, the third military revolution will enable a “digital synergy” of AI and other breakthrough technologies to collect, process, and make sense of zettabytes of data within seconds. In the decision-centric conflicts of the future, an adaptive mesh of battle networks and kill webs will form a connected “backbone” of commanders, operators, and weapons. Whoever leverages technological infrastructure to reach the best decisions first, wins. This is the final cognitive frontier. But where do humans fit into the puzzle of decisions made in seconds, and battles waged in minutes?

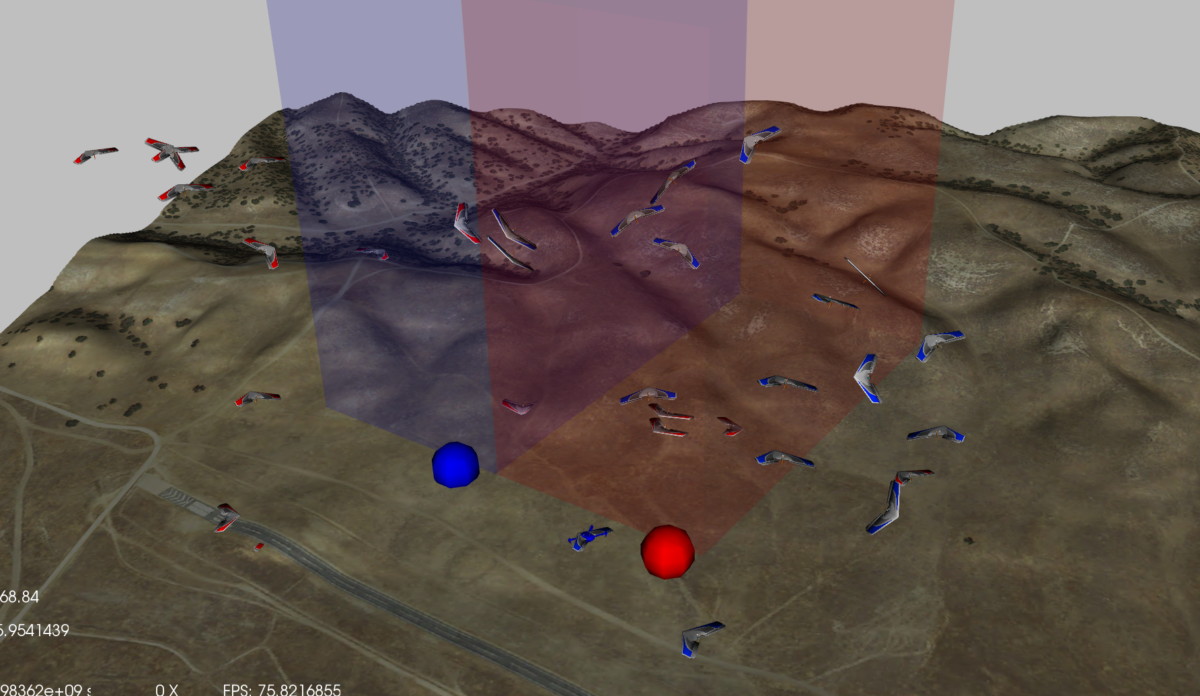

Mosaic warfare is one way AI could enable distributed warfare and adaptable networks of decision-making in a hyperwar scenario / Courtesy DARPA

Hyperwar: Decision-making at warp speed

In their 2017 treatise On Hyperwar, retired USMC Gen. John Allen and SparkCognition founder Amir Husain redefine hyperwar as

“a type of conflict where human decision making is almost entirely absent from the observe-orient-decide-act (OODA) loop. As a consequence, the time associated with an OODA cycle will be reduced to near-instantaneous responses.”

The OODA loop, a framework for thinking about how decisions are made in theater, stands for Observe, Orient, Decide, and Act. Hyperwar diffuses the traditional OODA loop and expands monolithic decision-making structures into resilient, scalable, adaptable kill webs, with the automated discretion to select, target, and engage opposing forces faster than human operators can. Human loops are vulnerable; digital loops are fast and flexible. While distinctions are not always clear-cut, the OODA loop is often used to help differentiate levels of autonomy into the following categories:

In the loop, AKA semi-autonomous: AI analysis is used to select targets, with a human in command. Case study: The DoD’s controversial Algorithmic Warfare Cross-Functional Team (Project Maven) uses deep learning to analyze UAV imagery and identify ISIS targets, which it passes on to human decision-makers.

On the loop, AKA supervised autonomous: Machines act within predefined parameters to analyze, decide, and act. A human may intervene in or abort decisions. Case study: When operating in autonomous mode, Raytheon’s MK 15 Phalanx Close-In Weapon System selects targets with radar, analyzes their threat level, and independently fires.

Out of the loop, AKA fully autonomous: Machines observe, orient, decide, and act without human intervention. Case study: Another Raytheon military system, the Low-Cost UAV Swarming Technology (LOCUST), leads the charge in offensive drone swarm technology, which can be logistically difficult for human operators to directly control.

In the DARPA Service Academies Swarm Challenge, academy students compete in wargaming simulations to develop innovative UAV swarming tactics / Courtesy DARPA

Hyperwar is premised on a system of systems, and incorporates varying levels of mechanized autonomy to ease logistical constraints, coordinate movements, analyze data, and recognize patterns. Machines think and act faster than humans, adapting to operational time compression in an era where speed and complexity are battlefield commodities. A calculated, modular machine-enabled “decision-making stack” relieves commanders of routine decisions so they can focus on higher-level abstract problem solving. Leveraged correctly, the ultimate advantage of mechanized military decision-making is an increase in strategic bandwidth. Tactical decisions will be automated, but strategic decisions will remain the domain of humans.

Not today, SKYNET

Humans are not perfect decision-makers, but neither is artificial intelligence. Current technology has a long way to go if it is to be effectively implemented at hyperwar scale. Limitations such as imperfect data collection and training, lack of interoperability, and opaque core programming mean that AIs struggle to distinguish combatants from non-combatants in the fog of war. Amplification of bias, incorrect target selection, and potential conflict escalation are all risks of fielding premature, uncoordinated, and untested AI systems. Rigorous, realistic wargaming and testing must vet autonomous systems to build a foundation of trust if the U.S. is to remain competitive.

The final round of the AlphaDogfight Trials, a simulated dogfight in which an AI agent (Heron) beat a human F-16 pilot (Banger) / Courtesy DARPA

For now, fears of self-aware artificial intelligence are misplaced. Though current AI can defeat humans in complex board games, or even beat an F-16 fighter pilot in a simulated dogfight, the ability of the human brain to solve diverse problem sets and adapt to new environments is still out of reach, and may remain so for decades to come. With Russia and China creeping up on long-held U.S. techno-military advantages, SKYNET is an unrealistic concern. “So don’t worry about being evil,” says Defense One editor Patrick Tucker. “Worry about it being fast and stupid.”

No pAIn, no gAIn

The great strengths of AI can also become great weaknesses when the power of machines is harnessed incorrectly, negligently, or even maliciously. While data collection, machine learning, and battle networks are still far from reliable implementation in the field, the potential exists. Talks like those convened this week must work to preclude the possibility of AI-escalated conflict. Seismic shifts in technology, warfare, and the world are coming, whether the U.S. is ready for them or not. Decisions may be made in seconds, battles decided in minutes. In the words of the National Security Commission on Artificial Intelligence: “AI is going to reorganize the world. America must lead the charge.”